by Robert Abela

Nov 17, 2020 Web scraping, also known as web harvesting, is a software technique for extracting information from websites and exporting it to an Excel, CSV or JSON file so that it is easier to analyse. It mostly focuses on transforming unstructured data on the web (HTML) into structured data (a database or spreadsheet). Although web scraping can be done manually, this is a long and tedious process. It can be performed in various ways, from Google Docs to almost any programming language.

Jun 28, 2020 Web scraping is simply a technique to fetch data from a website. This can be carried out manually but it is usually faster and more efficient to automate the process. A script that parses an HTML site is called a scraper.

This article is part of a series that goes through all the steps needed to write a script that reads information from a website and saves it locally. Make sure that all the pre-requisites (at the end of this article) are in place before continuing.

Installing Selenium and other requirements

Selenium setup requires two steps:

- Install the Selenium library using the command: pip install selenium

- Download the Selenium WebDriver for your browser (the driver version must match the browser version exactly)

Chrome drivers can be found on chromium.org

Scraper setup requires two commands:

- pip install requests

- pip install beautifulsoup4
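
Once the commands above have run, a quick way to confirm that the packages are visible to the interpreter is to look them up by import name. This is a minimal sketch, not part of the original setup; note that the beautifulsoup4 package is imported under the name bs4.

```python
import importlib.util

# Import names of the packages installed above
# (the beautifulsoup4 distribution is imported as "bs4").
REQUIRED = ["selenium", "requests", "bs4"]


def missing_packages(names):
    """Return the import names that cannot be found by the interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages(REQUIRED)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All scraping packages are installed.")
```

If a package is reported as missing, re-run the corresponding pip install command with the same Python interpreter that runs the script.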

Scraping a website

What is web scraping?

Scraping is like browsing to a website and copying some content, but it is done programmatically (e.g. using Python), which makes it much faster. The limits on how fast you can scrape are essentially your bandwidth, your computing power and how much traffic the web server allows. Technically, this process can be divided into two parts:

- Crawling is the first part, which basically involves opening a page and finding all the interesting links in it, e.g. shops listed in a section of the yellow pages.

- Scraping comes next, where all the links from the previous step are visited to extract specific parts of the web page, e.g. the address or phone number.
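
The two phases can be sketched without touching the network. The snippet below uses Python's built-in html.parser module as a stand-in for a scraping library, and two made-up pages held in a dictionary; the URLs, markup and class names are all assumptions for illustration. A real scraper would download the pages instead.

```python
from html.parser import HTMLParser

# Hypothetical pages standing in for a real website.
PAGES = {
    "/shops": '<ul><li><a class="shop" href="/shops/1">Bakery</a></li>'
              '<li><a class="shop" href="/shops/2">Florist</a></li>'
              '<li><a href="/about">About</a></li></ul>',
    "/shops/1": '<h1>Bakery</h1><span class="phone">555-0101</span>',
    "/shops/2": '<h1>Florist</h1><span class="phone">555-0102</span>',
}


class LinkCollector(HTMLParser):
    """Crawling: collect the href of every <a class="shop"> link."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("class") == "shop" and "href" in attrs:
            self.links.append(attrs["href"])


class PhoneExtractor(HTMLParser):
    """Scraping: capture the text inside <span class="phone">."""

    def __init__(self):
        super().__init__()
        self._in_phone = False
        self.phone = None

    def handle_starttag(self, tag, attrs):
        if tag == "span" and dict(attrs).get("class") == "phone":
            self._in_phone = True

    def handle_data(self, data):
        if self._in_phone and self.phone is None:
            self.phone = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_phone = False


def crawl(index_url):
    """Phase 1: open the listing page and return the interesting links."""
    collector = LinkCollector()
    collector.feed(PAGES[index_url])
    return collector.links


def scrape(url):
    """Phase 2: visit one link and extract the phone number."""
    extractor = PhoneExtractor()
    extractor.feed(PAGES[url])
    return extractor.phone


results = {url: scrape(url) for url in crawl("/shops")}
print(results)
```

Keeping the two phases in separate functions means a site redesign that changes only the listing page, or only the detail pages, touches one parser rather than both.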

Challenges of Scraping

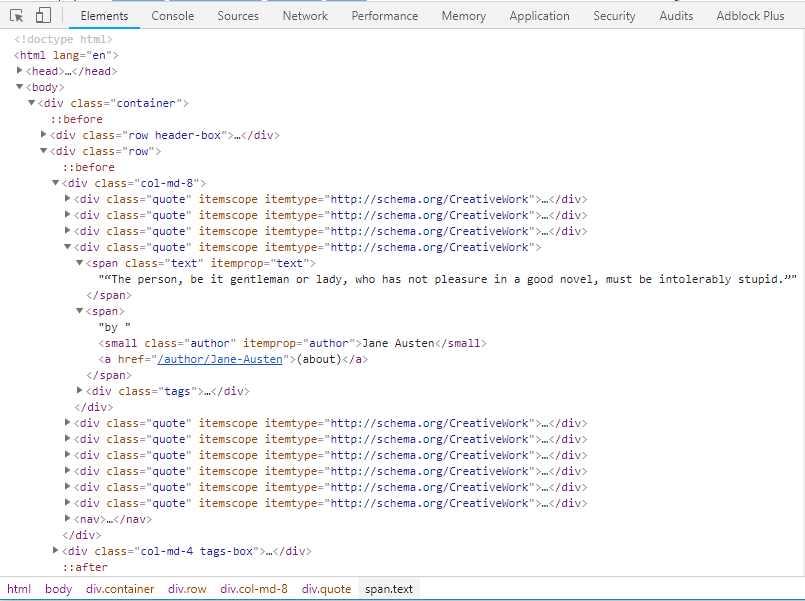

One main challenge is that websites vary widely, so you will likely end up writing a scraper specific to every site you deal with. Even if you stick with the same websites, updates and redesigns will likely break your scraper in some way (you will be pressing F12 to open the browser's developer tools frequently).

Some websites do not tolerate being scraped and will employ different techniques to slow or stop scraping. Another aspect to consider is the legality of this process, which depends on where the server is located, the terms of service and what you do with the data once you have it, amongst other things.
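
One common way for a site to signal which paths automated clients may visit is its robots.txt file. Checking it does not settle the legal questions above, but it is a reasonable courtesy. The sketch below uses the standard library's urllib.robotparser on a made-up robots.txt; the file content and the user agent name are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A real scraper would load it from
# the site with parser.set_url(...) followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "my-scraper"  # hypothetical user agent name

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url):
    """Return True if robots.txt permits USER_AGENT to fetch the URL."""
    return parser.can_fetch(USER_AGENT, url)


# Seconds to pause between requests; fall back to 1 if none is declared.
delay = parser.crawl_delay(USER_AGENT) or 1

for url in ("https://example.com/shops/1",
            "https://example.com/private/data.html"):
    print(url, "allowed" if allowed(url) else "disallowed")
print("Pause between requests:", delay, "seconds")
```

In a real crawl loop, calling time.sleep(delay) between requests keeps the load on the server low and makes it less likely that the scraper gets blocked.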

An alternative to web scraping, when available, is an Application Programming Interface (API), which offers a way to access structured data directly (using formats like JSON and XML) without dealing with the visual presentation of the web pages. Hence it is always a good idea to check whether the website offers an API before investing time and effort in a scraper.
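
To illustrate why an API is easier to work with, the sketch below parses a JSON body of the kind an API endpoint might return. The response text and its field names are invented for this example; a real API defines its own structure.

```python
import json

# Hypothetical response body from an API endpoint.
API_RESPONSE = (
    '{"shops": ['
    '{"name": "Bakery", "phone": "555-0101"}, '
    '{"name": "Florist", "phone": "555-0102"}'
    ']}'
)

# The data is already structured, so no HTML parsing is needed.
data = json.loads(API_RESPONSE)
phones = {shop["name"]: shop["phone"] for shop in data["shops"]}
print(phones)
```

The fields are addressed by name rather than by their position in the page layout, so a visual redesign of the website does not break this code.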

Scraping libraries

While there are many ways to get data from web pages (e.g. using Excel, browser plugins or other tools), this article will focus on how to do it with Python. The flexibility of a programming language makes this a very powerful approach, and there are very good libraries available, such as Beautiful Soup, which will be used in the sample below. There is a very good write-up on how to Build a Web Scraper With Beautiful Soup. Another framework to consider is Scrapy.

What is Selenium? Why is it needed?

Some websites use JavaScript to load parts of the page later (not directly when the page is loaded). Some links also call JavaScript, and the destination URL is computed only on click. These techniques are becoming increasingly common (even as an anti-scraping measure) and unfortunately libraries like Beautiful Soup do not handle them well, since they parse the HTML without executing any JavaScript.

In comes Selenium, a framework designed primarily for automated testing of web applications. It allows developers to control a browser programmatically from different programming languages. Since with Selenium there is a real browser rendering the page, JavaScript is executed normally and the problems mentioned above can be avoided. This of course requires more resources and makes the whole process slower, so it is wise to use it only when strictly required.

Beautiful Soup and Selenium can also be used together as shown in this interesting article at freecodecamp.org.

Building a first scraper

This first scraper will perform the following steps:

- Visit the page and parse source HTML

- Check that the page title is as expected

- Perform a search

- Look for expected result

- Get the link URL

Two implementations, using Beautiful Soup and Selenium, can be found below.

Scraper using Beautiful Soup
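
A minimal sketch of the five steps above using requests and Beautiful Soup. The site URL, expected title, search path and query parameter name are hypothetical placeholders, and the sketch assumes the search is a plain GET request; adapt all of them to the site being scraped.

```python
from urllib.parse import urljoin

# All of the values below are hypothetical placeholders for a real site.
BASE_URL = "https://example.com/"
EXPECTED_TITLE = "Example Shop Directory"
SEARCH_URL = urljoin(BASE_URL, "search")
SEARCH_PARAM = "q"


def make_soup(html):
    """Parse an HTML string with Beautiful Soup."""
    # Imported here so that loading this file does not fail on a
    # machine where beautifulsoup4 has not been installed yet.
    from bs4 import BeautifulSoup
    return BeautifulSoup(html, "html.parser")


def title_matches(soup, expected):
    """Step 2: check that the page title is as expected."""
    return soup.title is not None and soup.title.get_text(strip=True) == expected


def find_link(soup, text, base_url):
    """Steps 4 and 5: return the absolute URL of the first link whose
    text contains `text` (case-insensitive), or None if there is none."""
    for anchor in soup.find_all("a", href=True):
        if text.lower() in anchor.get_text().lower():
            return urljoin(base_url, anchor["href"])
    return None


def run_scraper(query, expected_link_text):
    """Run all five steps against the (hypothetical) site."""
    import requests  # imported lazily for the same reason as bs4

    # Step 1: visit the page and parse the source HTML.
    home = requests.get(BASE_URL, timeout=10)
    home.raise_for_status()
    if not title_matches(make_soup(home.text), EXPECTED_TITLE):
        raise RuntimeError("Unexpected page title")

    # Step 3: perform a search (assumed to be a GET with one parameter).
    results = requests.get(SEARCH_URL, params={SEARCH_PARAM: query}, timeout=10)
    results.raise_for_status()

    # Steps 4 and 5: look for the expected result and get its URL.
    return find_link(make_soup(results.text), expected_link_text, results.url)
```

Calling run_scraper("bakery", "Bakery") would return the URL of the first matching result, or None if the search results contain no such link.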

Scraper using Selenium
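
A minimal sketch of the same five steps driven through a real browser with Selenium. It assumes Chrome with a matching ChromeDriver on the PATH; the site URL, expected title and the name of the search field are hypothetical placeholders.

```python
# All of the values below are hypothetical placeholders for a real site.
BASE_URL = "https://example.com/"
EXPECTED_TITLE = "Example Shop Directory"
SEARCH_FIELD_NAME = "q"


def run_selenium_scraper(query, expected_link_text):
    """Run the five steps in a real browser; return the link URL or None."""
    # Imported here so that this file can be loaded on machines
    # where Selenium is not installed.
    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.common.keys import Keys

    driver = webdriver.Chrome()  # needs Chrome and a matching ChromeDriver
    try:
        # Wait up to 10 seconds for elements that JavaScript adds later.
        driver.implicitly_wait(10)

        # Step 1: visit the page (the browser parses and renders it).
        driver.get(BASE_URL)

        # Step 2: check that the page title is as expected.
        if driver.title != EXPECTED_TITLE:
            raise RuntimeError("Unexpected page title: " + driver.title)

        # Step 3: perform a search by typing into the search field.
        box = driver.find_element(By.NAME, SEARCH_FIELD_NAME)
        box.send_keys(query)
        box.send_keys(Keys.RETURN)

        # Step 4: look for the expected result among the links.
        links = driver.find_elements(By.PARTIAL_LINK_TEXT, expected_link_text)

        # Step 5: get the link URL.
        return links[0].get_attribute("href") if links else None
    finally:
        # Always close the browser, even if a step above failed.
        driver.quit()
```

Compared with the Beautiful Soup version, the search here is performed by interacting with the page as a user would, so it keeps working when the results are loaded by JavaScript.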

Pre-requisites

This is part of a series that goes through all the steps needed to write a script that reads information from a website and saves it locally. This section lists all the technologies you should be familiar with and all the tools that need to be installed.

Basic knowledge of HTML

This article series assumes familiarity with Python 3.x and a basic understanding of web page source code, including:

- HTML document structure

- Attributes of common HTML elements

- Basic JavaScript and AJAX

- CSS classes

- HTTP request parameters

- Awareness of lazy-loading techniques

A good place to start is W3Schools.

Installing Python

As part of the pre-requisites, installing the correct version of Python and pip is required. This setup section assumes a Windows operating system, but it should be easily transferable to macOS or Linux.

Which Python version should one use: Python 2 or 3? This was a point of discussion in the past because the two are not compatible, so one had to pick a version (Python 2.7, the last release of Python 2.x, came out in 2010). Today (2020) it is safe to go with version 3.x; the latest stable version at the time of writing is 3.8.
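
Because the two versions are not compatible, a script can guard against being started with the wrong interpreter. A minimal sketch follows; the minimum version of 3.6 is an assumption for illustration, and the code deliberately avoids Python 3 only syntax so that the check itself also runs under Python 2.

```python
import sys

# Hypothetical minimum version; adjust to what your script needs.
REQUIRED = (3, 6)


def is_supported(version_info=sys.version_info):
    """Return True if the given (major, minor, ...) version is recent enough."""
    return tuple(version_info[:2]) >= REQUIRED


if not is_supported():
    sys.exit("This script needs Python %d.%d or later." % REQUIRED)

print("Running Python %d.%d" % tuple(sys.version_info[:2]))
```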

Start by downloading the latest version of Python 3 from the official website. Install it as you would any other software. Make sure you select the option to add Python to the PATH during installation.

To confirm that it was successfully installed, open the Command Prompt window and type python. The interpreter should start and print its version number.

Installing and using pip

pip is the package installer for Python. It is very likely that it came along with your Python installation. You can check by entering pip -V in a Command Prompt window; it should print the pip version and the location it is installed in.

If pip is not available, it needs to be installed by following these steps:

- Download get-pip.py to a folder on your computer.

- Open a command prompt

- Navigate to the folder where get-pip.py was saved

- Run the following command: python get-pip.py